Scamming for Good

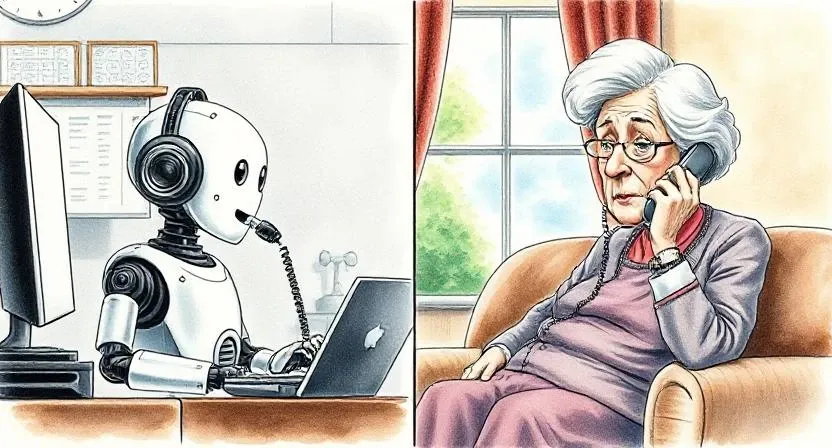

Back when I was in high school, my grandma got a call from a scammer claiming to be me. The story was that I had gotten caught in a bad situation and needed a bunch of money wired right away. To which she responded, "Well, you got yourself into this mess. You can get yourself out." (click) Yeah, she did not mess with nonsense, scammers or not.

But many do fall for these calls. As methods get more sophisticated, scams are only getting harder to spot. Between a lack of social interaction, clouded judgment, and a dated sense of technology, the elderly are often heavily targeted. Americans over 60 lost $3.4 billion to scams in 2023, an 11% increase from the previous year.

Do Humans Dream of Electric Sheep?

I recently came upon this story about a woman who fell in love with ChatGPT. To summarize, she was lonely and living long distance from her husband. She heard about others using chatbots as friends or companions. So she instructed ChatGPT how to behave and, like a good boyfriend, "he" continuously provided her with niceties and positive encouragement. It eventually got serious and turned from hours a day of conversation to romance. She formed an attachment to the persona she created. This was indeed the caricature of what she asked for. There were emotions, joy, lots of crying ... and love?

Sure, there are many Sci-Fi stories that go this way (practically half of Black Mirror episodes). But this was more akin to Her than to Ex Machina, because she willingly did this. She was probably not trying to fall in love, but she was at least creating some kind of companionship.

And Now, for the Bad Idea

If people want to be fooled into loving a bot, could a bot be directed as a force for good in their lives? Let’s create a bot that calls the elderly but talks them into doing good for themselves. Sometimes they just need someone to chat with, listen to their troubles, make uplifting conversation about the day, or encourage them to go outside and touch grass. This may be generally helpful and improve their quality of life. Probably not? I don’t know.

Seriously?

These are shower thoughts. But what might this look like in practice? Using computers for therapy has been discussed and experimented with for decades, but I’m not sure if this would be considered therapy. My layman’s understanding is that this could be helpful for many people, but certainly not for everyone. As a programmer, I am a little concerned, since LLMs can still hallucinate and lead me down a rabbit hole. So having some real licensed therapists reviewing interaction summaries and guiding the bots would probably be good.

How Would This Work?

A relative of the patient, or (if they have no family) a care provider, would order this "treatment." They would set up some kind of AI persona that the patient would find favorable. Then the bot would start making regular calls. Depending on the patient, you may even be able to tell them this is a bot. But the skeptical would get "scammed" into it. Dystopian? Kind of.

The elderly could be the population to start with, but once we have a grip on these kind bots, we can probably use the same technology to uplift other populations.

AI is being used for everything from good to bad and everything in between. Either way, we can’t ignore the effect it will continue to have on society. Here’s to brainstorming more bad ideas for doing good things.